AR assistant

SKILLS: Concept Development, Programming (C#), User Experience

AR application 2016

- Abstract

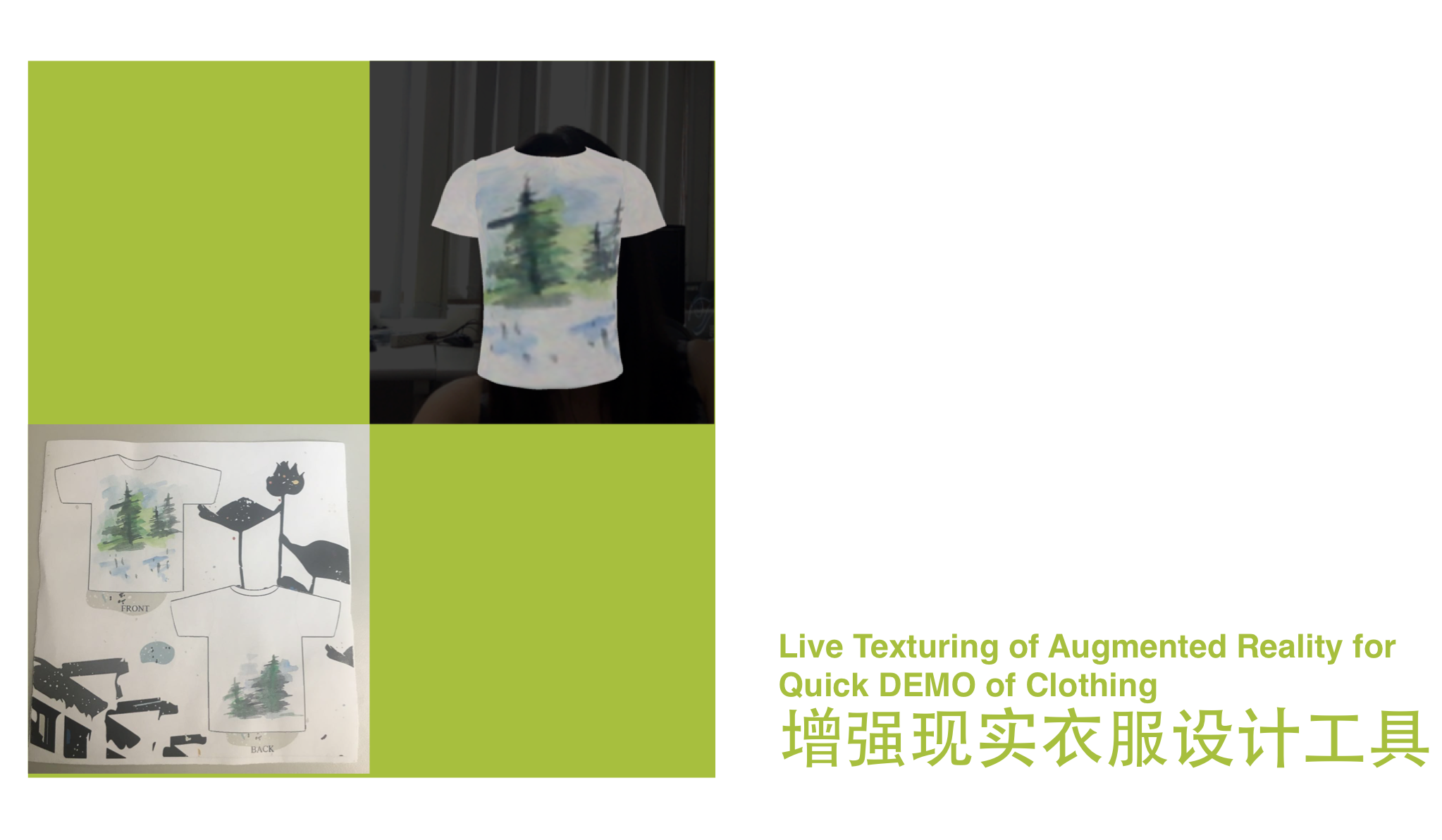

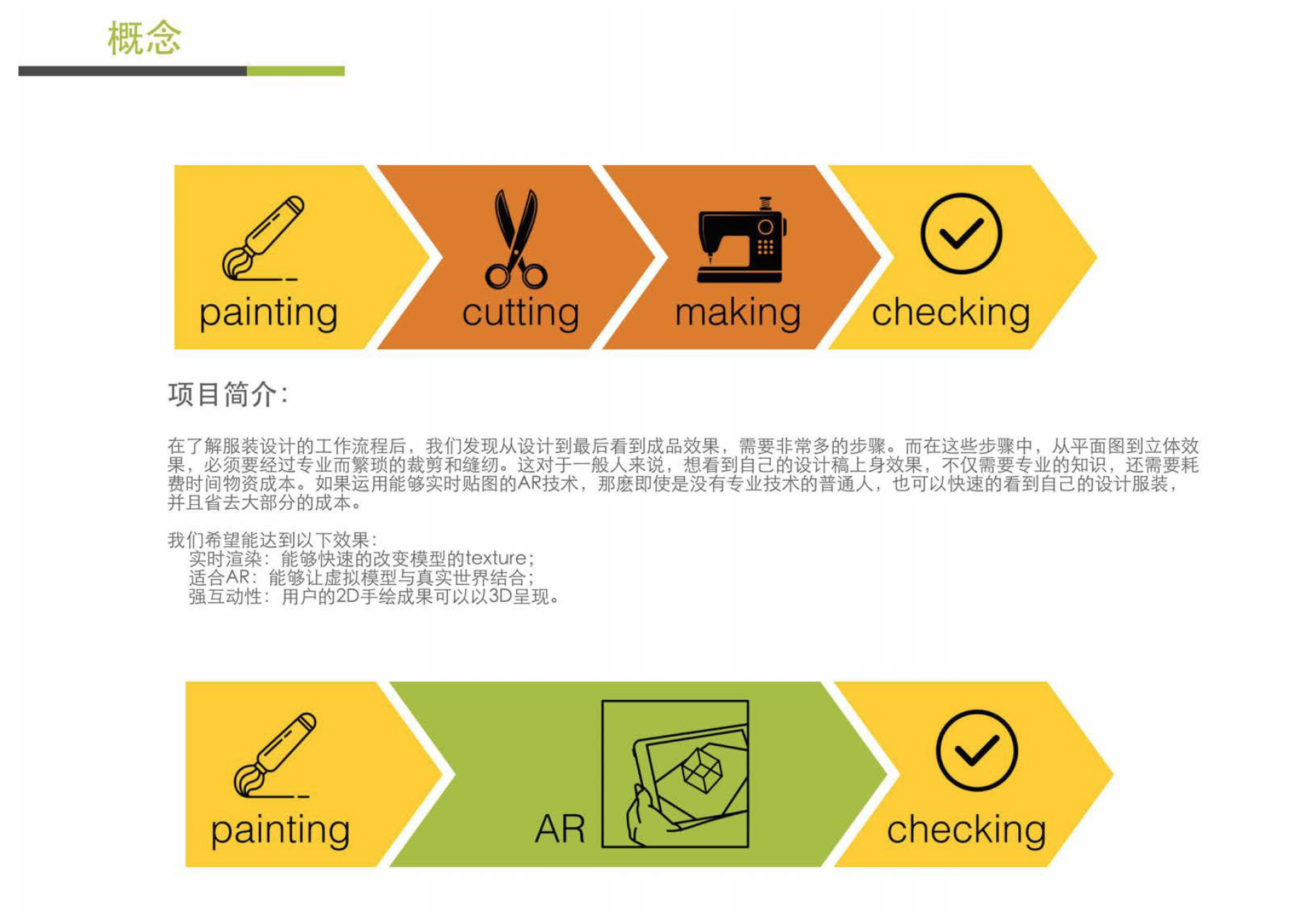

We found costume design to be a complex process, with many steps between the initial design and the final product. Turning graphic designs into real clothes costs time and materials, and it also involves cumbersome steps such as cutting and sewing. Real-time AR drawing technology offers an alternative: people without professional skills can design costumes on their own at relatively low cost.

We hope to achieve the following results:

Real-time rendering: quickly change the texture of a model

Suitable for AR: the virtual model can be placed in real-world scenes

Strong interactivity: users' hand drawings are presented on a 3D model

- Implementation

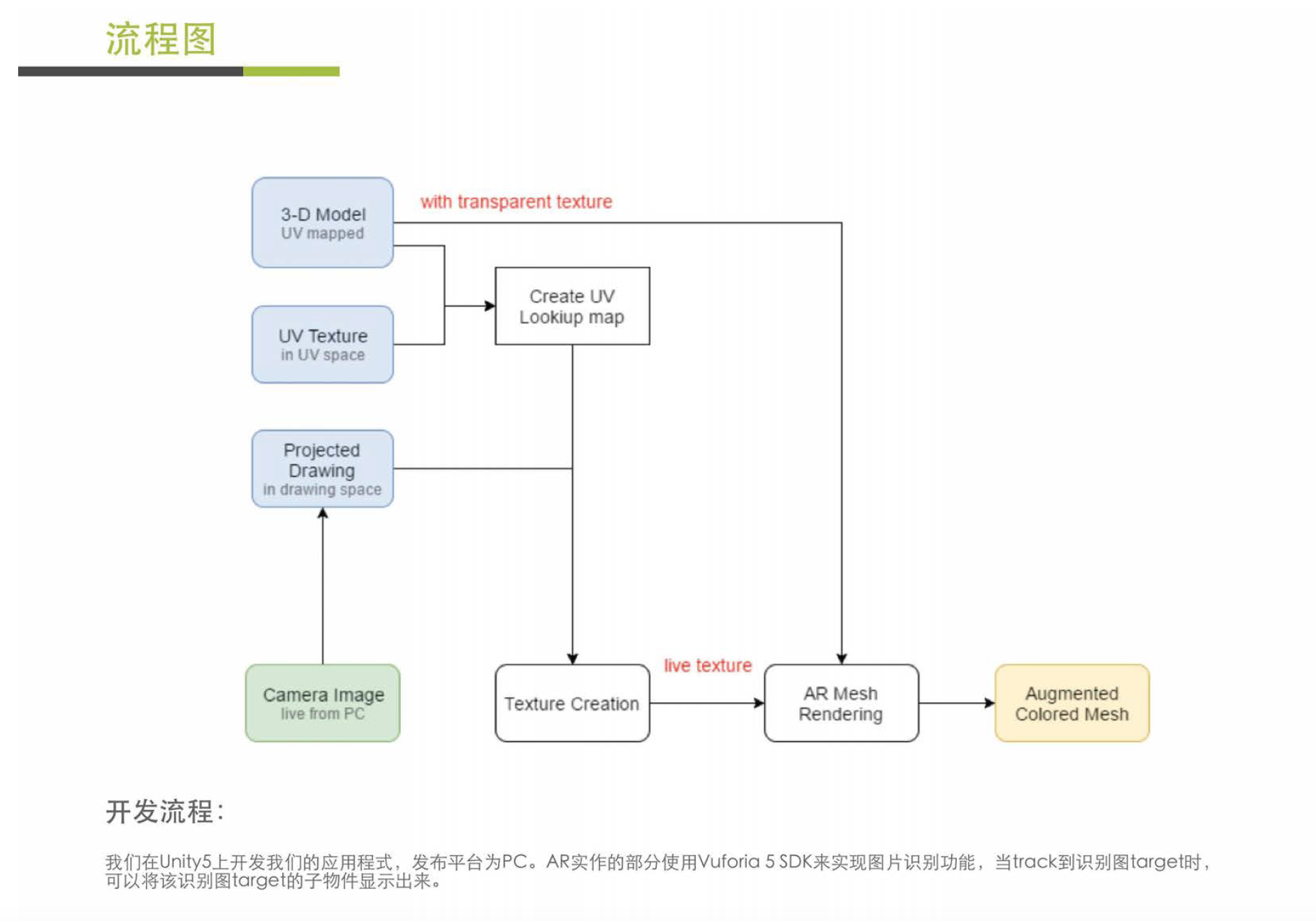

We developed the app in Unity 5 and released it for PC. For AR, we used the Vuforia 5 SDK to recognize images. When the image target is tracked, its child objects are displayed.

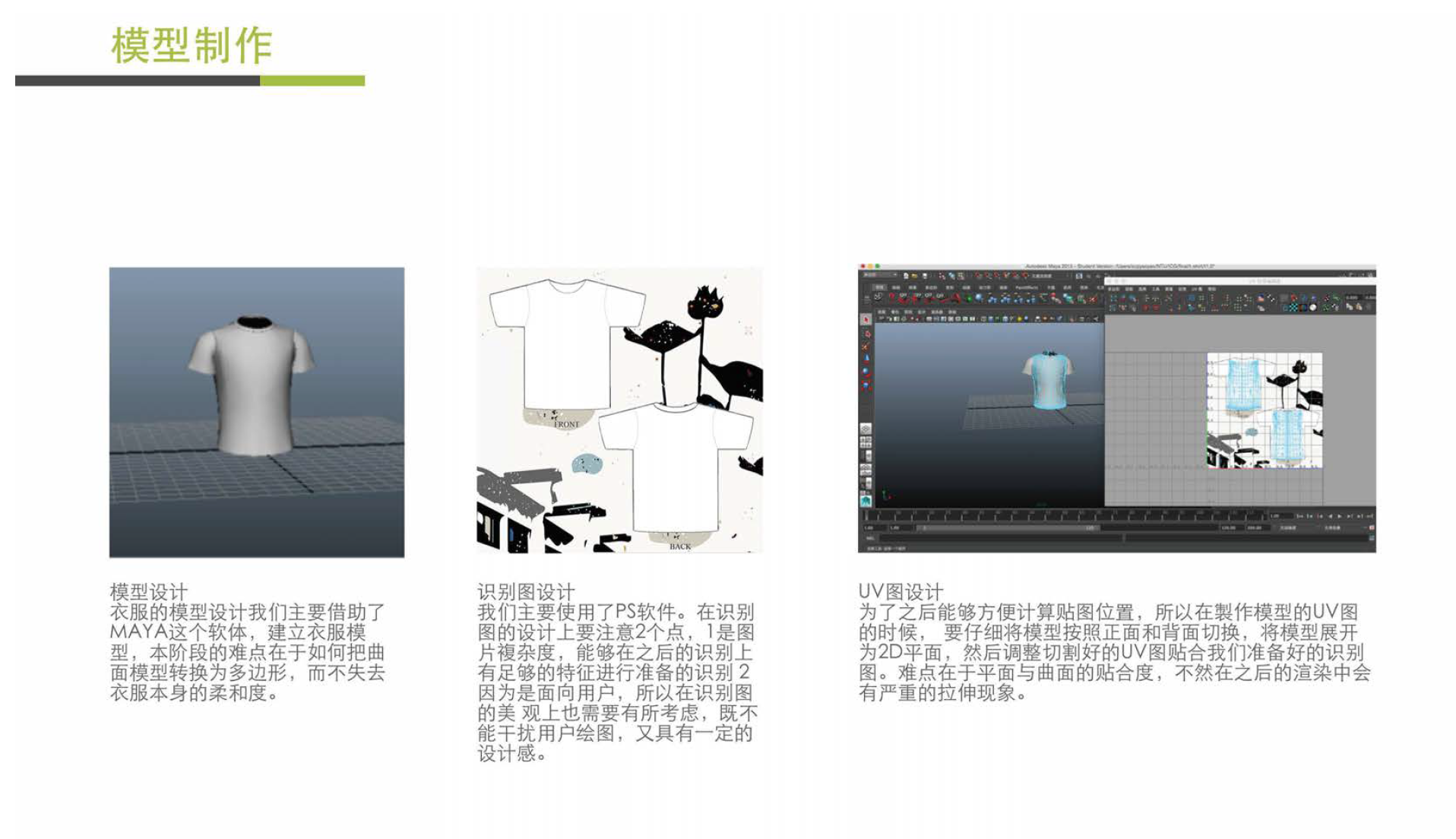

- Model Design

We mainly used Maya to model the clothes. The difficulty at this stage lies in converting the curved-surface model into polygons without losing the softness of the fabric.

- Recognized Image Design

We mainly used Photoshop at this stage. When designing the recognized image, we paid attention to two points: 1. complexity, i.e. enough features for the subsequent recognition process; 2. visual appeal. Because users see and handle the image, aesthetics also matter. A sense of design is important, provided it is not intrusive.

- UV Map Design

To calculate the position of the image, the front and back of the model must be separated accurately in the UV layout. The model is then unfolded into two dimensions, and a snapshot of the UV layout is adjusted to fit the recognized image. The difficulty at this stage lies in reconciling the flat layout with the curved surface. A mismatch causes the image to be stretched disproportionately during rendering.
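The stretching problem can be checked numerically. The sketch below is not part of the original pipeline. It shows one common way to measure UV distortion, assuming the mesh is given as vertex positions, per-vertex UVs and triangle index triples (all function names are hypothetical). For each triangle it compares that triangle's share of UV area with its share of surface area. Values near 1.0 mean the drawing keeps its proportions there. Values far from 1.0 flag regions where the drawing will look stretched or squashed.

```python
import math


def triangle_area_3d(a, b, c):
    # Half the magnitude of the cross product of two edge vectors.
    ux, uy, uz = b[0] - a[0], b[1] - a[1], b[2] - a[2]
    vx, vy, vz = c[0] - a[0], c[1] - a[1], c[2] - a[2]
    cx = uy * vz - uz * vy
    cy = uz * vx - ux * vz
    cz = ux * vy - uy * vx
    return 0.5 * math.sqrt(cx * cx + cy * cy + cz * cz)


def triangle_area_2d(a, b, c):
    # Half the absolute 2D cross product (shoelace formula).
    return 0.5 * abs((b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1]))


def area_stretch(positions, uvs, triangles):
    """Per-triangle UV-area / surface-area ratio, normalized by the mesh-wide ratio.

    Hypothetical helper: 1.0 means the triangle gets its fair share of the
    texture; degenerate (zero-area) surface triangles get infinity.
    """
    pairs = []
    total_3d = total_uv = 0.0
    for i, j, k in triangles:
        a3 = triangle_area_3d(positions[i], positions[j], positions[k])
        a2 = triangle_area_2d(uvs[i], uvs[j], uvs[k])
        pairs.append((a2, a3))
        total_3d += a3
        total_uv += a2
    mean = total_uv / total_3d
    return [(a2 / a3) / mean if a3 > 0 else float("inf") for a2, a3 in pairs]
```

This measure only captures area distortion. A triangle squeezed in one direction and stretched in the other can still score 1.0, so a stricter check would compare edge lengths or the full UV Jacobian.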

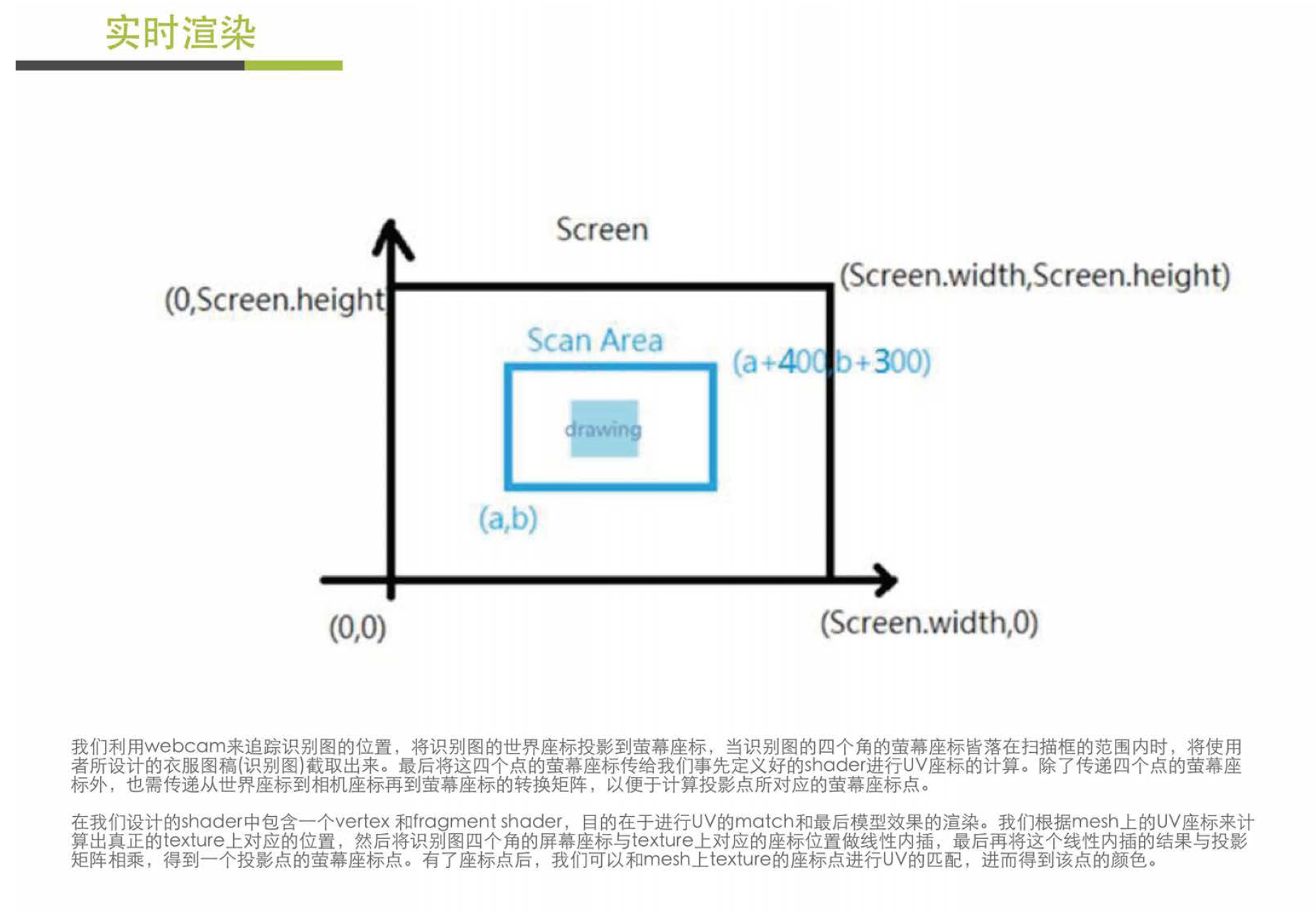

- Shader

We use the webcam to track the recognized image and project its world coordinates to the screen. When the screen coordinates of all four corners fall within the scan area, we take a screenshot of the user's clothing design (the recognized image). We then send the screen coordinates of the four corners to a predefined shader, which calculates UV coordinates. We also send the transformation matrix from world coordinates to camera coordinates and then to screen coordinates, so that the shader can compute the screen coordinates of each projected point.
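The capture check can be sketched as follows. This is written in Python for readability and is not the app's actual Unity C# code. It assumes a combined 4x4 world-to-clip matrix (projection times view, column-vector convention), OpenGL-style normalized device coordinates in [-1, 1], a screen origin at the bottom-left, and a rectangular scan area. The function names and the `scan_rect` parameter are hypothetical. In Unity, `Camera.WorldToScreenPoint` performs the equivalent projection.

```python
def mat_vec4(m, v):
    # 4x4 row-major matrix times a column vector (x, y, z, w).
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]


def world_to_screen(mvp, point, width, height):
    """Project a world-space point to pixel coordinates (origin bottom-left).

    Returns None if the point is behind the camera (w <= 0).
    """
    x, y, _, w = mat_vec4(mvp, [point[0], point[1], point[2], 1.0])
    if w <= 0:
        return None
    ndc_x, ndc_y = x / w, y / w  # perspective divide -> NDC in [-1, 1]
    return ((ndc_x + 1) * 0.5 * width, (ndc_y + 1) * 0.5 * height)


def capture_corners(mvp, corners, width, height, scan_rect):
    """Return the four corners' screen coordinates if all of them lie inside
    scan_rect = (xmin, ymin, xmax, ymax); otherwise None (no screenshot yet)."""
    xmin, ymin, xmax, ymax = scan_rect
    screen = []
    for corner in corners:
        p = world_to_screen(mvp, corner, width, height)
        if p is None or not (xmin <= p[0] <= xmax and ymin <= p[1] <= ymax):
            return None
        screen.append(p)
    return screen


if __name__ == "__main__":
    # Toy camera at the origin looking down -z: x and y pass through, w = -z.
    mvp = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, -1, 0], [0, 0, -1, 0]]
    corners = [(-0.5, -0.5, -2), (0.5, -0.5, -2), (0.5, 0.5, -2), (-0.5, 0.5, -2)]
    print(capture_corners(mvp, corners, 640, 480, (200, 150, 440, 330)))
    # [(240.0, 180.0), (400.0, 180.0), (400.0, 300.0), (240.0, 300.0)]
```

Only when `capture_corners` returns a list would the screenshot be taken and the four points handed to the shader. A `None` result means the user should keep moving the drawing into the scan area.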

The shader consists of a vertex shader and a fragment shader, which handle UV matching and the final rendering of the model. For each UV coordinate on the mesh, we calculate the corresponding position on the real texture (the drawing) by linearly interpolating between the four corner coordinates of the recognized image. We then multiply the interpolated result by the projection matrix to get the screen coordinates of the projected point. Finally, we use those coordinates to look up the matching point in the screenshot texture, which gives the color of that point on the mesh.
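The per-point lookup can be sketched as follows. This is Python under stated assumptions, not the shader itself. It assumes:

- the mesh UV (u, v) maps directly onto the recognized image, because the UV snapshot was fitted to it;
- the four corners are given in world space, ordered bottom-left, bottom-right, top-right, top-left;
- the matrix is the one captured together with the screenshot;
- the screenshot covers the full screen, with row 0 at the bottom;
- the paper is in front of the camera.

Interpolating in world space and then projecting is one reading of the text. All names are hypothetical.

```python
def lerp(a, b, t):
    # Component-wise linear interpolation between two points.
    return tuple(ai + (bi - ai) * t for ai, bi in zip(a, b))


def bilerp(corners, u, v):
    # corners: (bottom_left, bottom_right, top_right, top_left) world-space points.
    bl, br, tr, tl = corners
    bottom = lerp(bl, br, u)  # position along the bottom edge
    top = lerp(tl, tr, u)     # position along the top edge
    return lerp(bottom, top, v)


def mat_vec4(m, v):
    # 4x4 row-major matrix times a column vector.
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]


def uv_to_screen_uv(mvp, corners, u, v):
    """Map a mesh UV to normalized [0, 1] coordinates in the full-screen screenshot."""
    point = bilerp(corners, u, v)
    x, y, _, w = mat_vec4(mvp, [*point, 1.0])
    # Perspective divide, then remap NDC [-1, 1] to texture space [0, 1].
    return ((x / w + 1) * 0.5, (y / w + 1) * 0.5)


def sample_nearest(image, s, t):
    """Nearest-neighbour lookup; image is a list of rows with row 0 at the bottom."""
    h, w = len(image), len(image[0])
    col = min(max(int(s * w), 0), w - 1)
    row = min(max(int(t * h), 0), h - 1)
    return image[row][col]


def fragment_color(mvp, corners, screenshot, u, v):
    # What the fragment stage would do per pixel: UV -> screenshot coords -> color.
    s, t = uv_to_screen_uv(mvp, corners, u, v)
    return sample_nearest(screenshot, s, t)
```

Interpolating the four corners in world space before projecting keeps the lookup perspective-correct. Interpolating the four 2D screen corners directly would be an affine approximation that drifts when the paper is tilted toward or away from the camera. A real fragment shader would also use the GPU's texture filtering rather than nearest-neighbour sampling.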

- Results

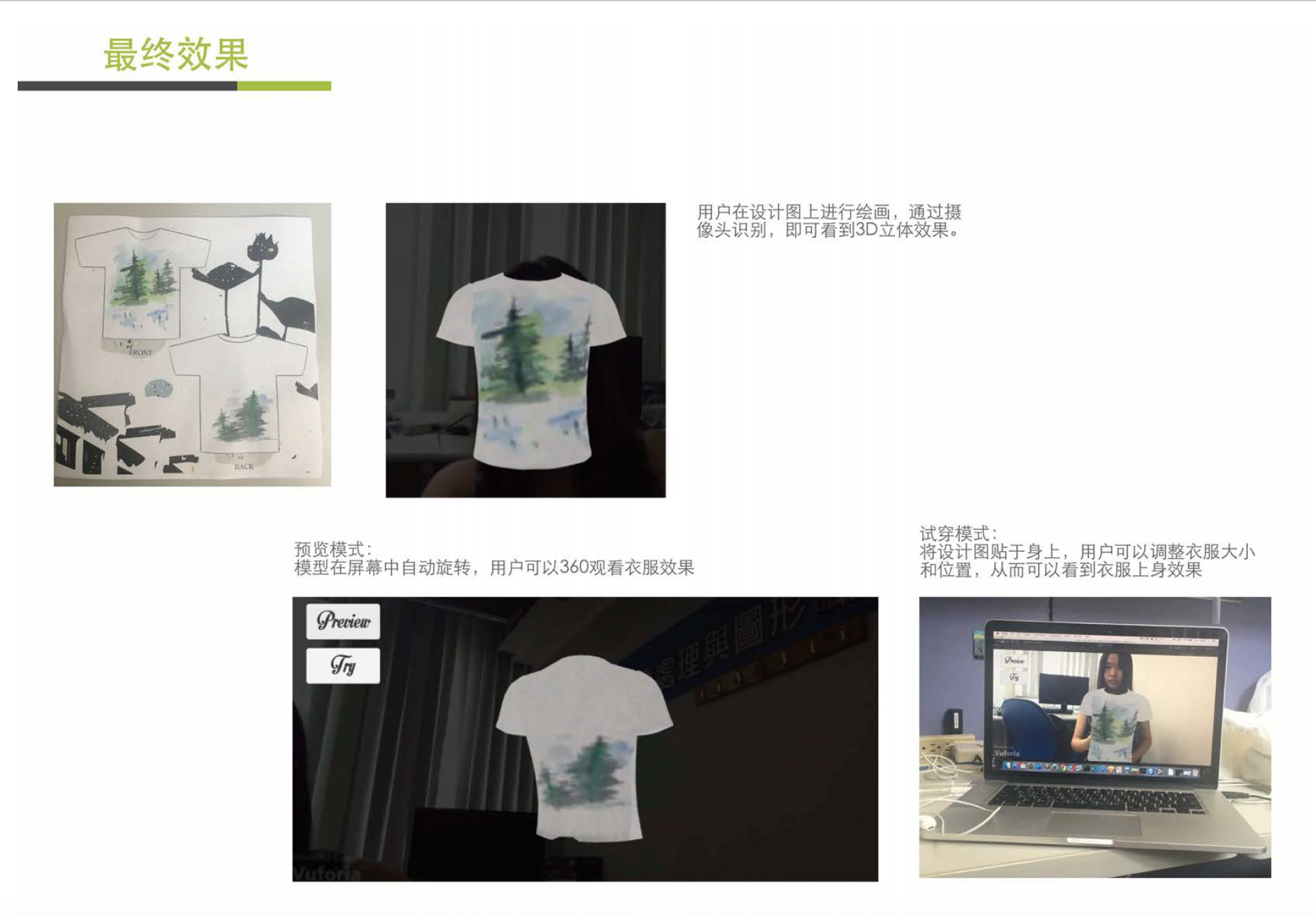

Users draw on paper and view their drawings in 3D in real time through camera-based image recognition.

Preview mode: the model rotates automatically on screen, giving users a 360-degree view of the clothes.

Fitting mode: users hold the drawing close to their bodies and adjust its size and position to get an idea of how the clothes would look on them.